Ankadrochik is best understood as a flexible way of working that combines modularity, outcome-focused design, and observable systems. Rather than a rigid framework, it’s a set of principles for building composable components, measuring user value, and maintaining standards across teams. The core philosophy emphasizes clarity, feedback loops, and practical results over complexity.

When you search for “ankadrochik” across industry forums and documentation, you’ll notice something unusual: there’s no official definition. That’s intentional. The term functions as a mindset rather than a prescribed system, so the first step toward understanding it is seeing how teams actually use it in practice.

What Makes Ankadrochik Different?

At its core, Ankadrochik is not a software framework, platform, or branded methodology. Instead, it’s a philosophy for how work gets organized, one that prioritizes building things in small, reusable pieces and measuring what actually matters to users. Organizations adopt the label when they want to signal that they operate with clarity, modularity, and real feedback loops.

The reason people struggle to pin down ankadrochik is that it has no fixed definition on Wikipedia or in academic texts. This blank slate is a feature, not a bug. It allows teams, startups, and enterprises to apply its principles to their own context while keeping the focus on what’s measurable and valuable.

The Four Pillars of Ankadrochik

Understanding Ankadrochik requires examining its four foundational principles. These aren’t rigid rules but habits that separate teams using this approach from those working without clear standards.

Modularity as Your Foundation

The first principle treats every component—whether a feature, service, or process—as a discrete, replaceable unit. When you build modularly, you reduce the blast radius of changes. A bug in one module doesn’t cascade through your entire system. Teams can work in parallel without stepping on each other’s toes. Instead of one monolithic release every quarter, you enable incremental updates that feel safer to ship.

Practical tools for modularity include API contracts (OpenAPI or GraphQL schemas), feature flags that let you toggle functionality, and versioning strategies that prevent breaking changes from surprising consumers downstream.
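As a minimal sketch of how a contract and a feature flag can work together, the example below defines a small interface and picks an implementation based on a flag. The names `PriceCalculator`, `FlatPricing`, `DiscountPricing`, and the `discount_pricing` flag are hypothetical and chosen only for illustration. A real team would more likely express the contract as an OpenAPI or GraphQL schema and read flags from a flag service.

```python
from dataclasses import dataclass
from typing import Protocol


class PriceCalculator(Protocol):
    """Hypothetical contract: every pricing module exposes total()."""

    def total(self, subtotal_cents: int) -> int: ...


class FlatPricing:
    """Existing behavior: charge the subtotal unchanged."""

    def total(self, subtotal_cents: int) -> int:
        return subtotal_cents


@dataclass
class DiscountPricing:
    """New module shipped behind a flag; replaceable without touching callers."""

    percent_off: int

    def total(self, subtotal_cents: int) -> int:
        # Integer arithmetic keeps the result in whole cents.
        return subtotal_cents * (100 - self.percent_off) // 100


def select_calculator(flags: dict[str, bool]) -> PriceCalculator:
    """Pick an implementation from the flag state; callers only see the contract."""
    if flags.get("discount_pricing", False):
        return DiscountPricing(percent_off=10)
    return FlatPricing()


calculator = select_calculator({"discount_pricing": True})
print(calculator.total(1000))
```

Because callers depend only on the `total` method, turning the flag off restores the old behavior without a code change, which is the reduced blast radius described above.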

User Outcomes Over Output Volume

Here’s where Ankadrochik departs from older management approaches: it measures success by what users gain, not how many features you shipped. Instead of tracking “lines of code written” or “tasks completed,” you define a single outcome per iteration, something testable and tied to user behavior. Does activation increase? Does time-to-value shrink? Does retention improve for the affected segment?

This shift forces clarity. Vague goals disappear. Instead of “improve performance,” you ask “reduce page load time from 4s to 2s for mobile users in North America.” The specificity makes learning possible.
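One way to keep an outcome this specific is to write it down as data. The sketch below is an assumption about how a team might record it; the `Outcome` class and its field names are hypothetical, and the numbers come from the page-load example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """A single measurable outcome for one iteration (illustrative only)."""

    metric: str
    segment: str
    baseline: float
    target: float
    lower_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        """True when the observed value reaches the target."""
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target

    def progress(self, observed: float) -> float:
        """Fraction of the baseline-to-target gap closed so far."""
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0
        return (observed - self.baseline) / gap


page_load = Outcome(
    metric="page_load_seconds",
    segment="mobile users in North America",
    baseline=4.0,
    target=2.0,
)

print(page_load.is_met(3.0), page_load.progress(3.0))
```

With a baseline and target recorded up front, the end-of-iteration question becomes a comparison rather than a debate.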

Observability Baked Into Design

If you can’t see what’s happening, you can’t improve it. Teams practicing ankadrochik instrument their systems from day one. That means structured logging, distributed tracing, and dashboards that show leading indicators (what users are doing right now) rather than just lagging ones (what happened last quarter).

This principle applies beyond engineering. Product teams log decision-making rationale in decision archives. Marketing teams instrument campaigns with user session tracking. Support teams track ticket patterns to identify systemic friction. Observability creates the feedback loop that lets you validate assumptions fast.
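On the engineering side, structured logging can start very small. The sketch below uses only Python’s standard `logging` and `json` modules. The `JsonFormatter` class, the `fields` key, and the `checkout_completed` event are hypothetical names for illustration, not part of any prescribed Ankadrochik toolkit.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "event": record.getMessage(),
            # Extra key/value pairs passed via extra={"fields": {...}}.
            **getattr(record, "fields", {}),
        }
        return json.dumps(payload, sort_keys=True)


def build_logger(name: str) -> logging.Logger:
    """Create a logger that emits structured JSON to stderr."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid attaching duplicate handlers
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


log = build_logger("checkout")
log.info(
    "checkout_completed",
    extra={"fields": {"user_segment": "mobile", "duration_ms": 1840}},
)
```

Because every event is a flat JSON object, a dashboard or query tool can filter on `user_segment` or aggregate `duration_ms` without parsing free-form text.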

Standards and Automation as Friction Reducers

Complexity compounds. Teams using Ankadrochik fight it with shared standards and automation that enforces them. This includes API naming conventions, release processes, access controls, and code templates. When you standardize, you stop reinventing wheels. Engineers spend less time debating “how do we structure this?” and more time solving user problems.

Automation is the ally here: CI pipelines enforce your standards automatically, schema validators catch contract violations before they become bugs, and scaffolding CLIs eliminate repetitive setup work.
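To illustrate the kind of check a CI pipeline could run, here is a minimal sketch of a naming-convention validator. The two rules it enforces (a `/v1/`-style version prefix and snake_case field names) are assumed conventions picked for the example. Your own standards may differ.

```python
import re

# Assumed conventions for this sketch; substitute your team's own rules.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")
VERSIONED_PATH = re.compile(r"^/v\d+/")


def check_endpoint(path: str, fields: list[str]) -> list[str]:
    """Return a list of human-readable violations for one endpoint."""
    violations = []
    if not VERSIONED_PATH.match(path):
        violations.append(f"{path}: path must start with a version prefix such as /v1/")
    for field in fields:
        if not SNAKE_CASE.match(field):
            violations.append(f"{path}: field '{field}' is not snake_case")
    return violations


problems = check_endpoint("/orders", ["orderId", "created_at"])
for problem in problems:
    print(problem)
```

In a pipeline, a non-empty result would fail the build, so the standard is enforced by the tooling instead of by code review alone.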

How Ankadrochik Works in Practice

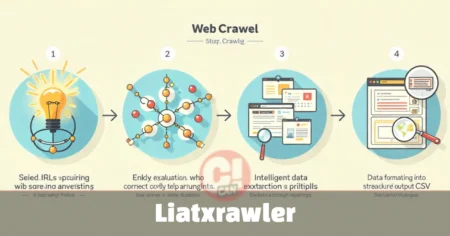

Ankadrochik becomes concrete when you see the workflow. Most teams following this approach move through four stages with each project or initiative.

Start by clarifying the problem and baseline. What pain are users experiencing? What’s the current behavior? Define a measurable outcome and the metrics that will prove you’ve succeeded. Then compose your solution using existing components before building anything new. Write interface contracts before code. Design the smallest possible change that could validate your hypothesis.

Next, deliver that thin slice behind a flag. Automate tests that verify your contracts work. Roll out gradually to a small cohort first. Finally, close the loop by comparing your actual results to the outcome you predicted. Keep what works, iterate on what doesn’t, and feed these learnings back into your standards and templates.
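The gradual rollout step is often implemented with deterministic bucketing, so the same user always gets the same answer. The sketch below shows one common way to do that with a hash. The function name `in_rollout` and the flag name are hypothetical, and a hosted feature-flag service would normally handle this for you.

```python
import hashlib


def in_rollout(user_id: str, flag: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` of buckets.

    Hashing flag and user together gives each flag an independent cohort,
    and raising `percent` only ever adds users, never removes them.
    """
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < percent


users = [f"user-{n}" for n in range(1000)]
cohort = [u for u in users if in_rollout(u, "new_checkout", 5)]
print(f"{len(cohort)} of {len(users)} users see the new checkout")
```

Starting at a small percentage and widening it as the metrics hold up is what makes the thin slice safe to ship.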

This cycle typically runs weekly or biweekly, creating rapid feedback. That rhythm is what separates teams practicing Ankadrochik from those that wait a quarter to learn whether a change worked.

Avoiding Common Pitfalls

Organizations sometimes stumble when they misapply Ankadrochik principles. The first mistake is building infrastructure before real usage justifies it. You’re not building a “platform”; you’re solving a user problem with the smallest, most observable change possible. Standards can grow later, once usage justifies them.

Another trap is forking your standards with one-off exceptions. When you permit special cases without documenting why, standards erode fast. Instead, establish a lightweight RFC process: if you need an exception, write it down, get agreement, and update the standard.

A third pattern to avoid is tracking what’s easy to measure instead of what matters. It’s simple to count deployments or code coverage, but those don’t reflect user value. Ankadrochik teams discipline themselves to measure outcomes: activation, time-to-value, retention, and safety metrics like change failure rate and mean time to recovery.
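The two safety metrics named above are simple to compute once deployments are recorded. The sketch below assumes a hypothetical `Deployment` record. It also measures recovery time from the deployment timestamp, which is a simplification, since many teams measure from when the incident was detected.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""

    deployed_at: datetime
    failed: bool = False
    recovered_at: Optional[datetime] = None


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Share of deployments that caused a failure in production."""
    if not deploys:
        return 0.0
    return sum(d.failed for d in deploys) / len(deploys)


def mean_time_to_recovery(deploys: list[Deployment]) -> Optional[timedelta]:
    """Average time from a failed deployment to its recovery, if any recovered."""
    durations = [
        d.recovered_at - d.deployed_at
        for d in deploys
        if d.failed and d.recovered_at is not None
    ]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


start = datetime(2025, 1, 6, 9, 0)
history = [
    Deployment(start),
    Deployment(start + timedelta(days=1)),
    Deployment(
        start + timedelta(days=2),
        failed=True,
        recovered_at=start + timedelta(days=2, minutes=30),
    ),
    Deployment(start + timedelta(days=3)),
]

print(change_failure_rate(history), mean_time_to_recovery(history))
```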

Starting Your Ankadrochik Journey

You don’t need to rebuild everything overnight. Pick one area where users face clear friction. Define your target outcome and baseline metrics. Adopt two standards—perhaps how you document APIs and how you release code—and publish them widely. Build your first solution, reusing as much as possible. Add observability to the core path. Release to a small group. Compare results to expectations. Decide quickly whether to expand, iterate, or revert.

Run as a one-week sprint, that sequence gives you evidence that the approach works and builds momentum for scaling it.

Why Ankadrochik Matters Now

In 2025, teams competing on speed and reliability need methods that reduce waste and tighten feedback loops. Ankadrochik provides a vocabulary and set of practices that organizations at any stage can adopt. Startups use it to scale without becoming chaotic. Enterprises use it to move faster without sacrificing stability.

The reason it lacks a formal definition isn’t a weakness—it’s exactly what makes it adaptable. Each team shapes Ankadrochik to their context while keeping the fundamentals: compose, observe, standardize, and let user signals guide the roadmap. That discipline is the real practice behind the curious name.